What Buyers Experience First

Early interactions with AI tools are often highly controlled. Vendors showcase curated use cases, stable data, and ideal prompts. During trials:

- Outputs appear consistently strong

- Latency is minimal

- Error cases are rare

- The tool feels “production-ready”

Expectations are set quickly, often before real usage begins.

Why Demos Are Optimized Over Reliability

Demos shape buying decisions more than long-term performance does.

Sales cycles reward immediate impact. A compelling demo closes deals; reliability issues emerge later, often after contracts are signed.

Reliability is expensive and invisible.

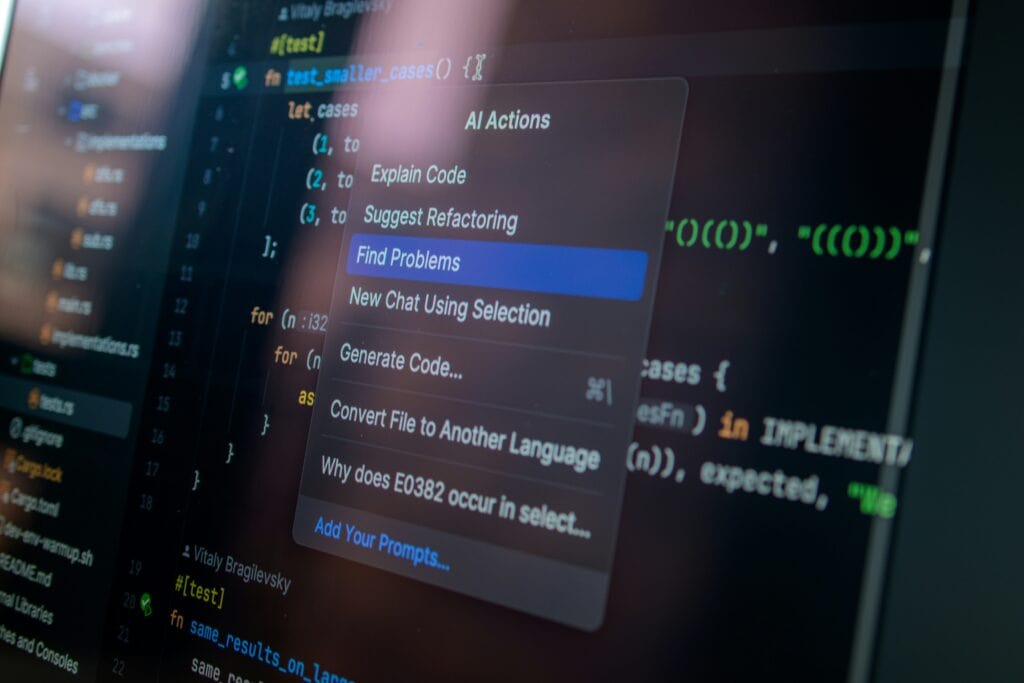

Building for consistency requires monitoring, fallback systems, testing across edge cases, and human oversight. These investments don’t show up clearly in demos.
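To make that hidden work concrete, here is a minimal sketch of one such investment: a wrapper that validates each output, falls back to a second model, counts outcomes for monitoring, and flags anything unresolved for human review. All names here (`ReliableCaller`, `flaky_primary`, `is_valid`) are hypothetical and do not come from any vendor's API; the stub model only simulates an edge-case failure.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ReliableCaller:
    """Hypothetical wrapper: validate, fall back, count outcomes, escalate."""
    primary: Callable[[str], str]
    fallback: Callable[[str], str]
    is_valid: Callable[[str], bool]
    stats: Counter = field(default_factory=Counter)  # simple monitoring counters

    def call(self, prompt: str) -> Optional[str]:
        # Try the primary model first, then the fallback.
        for name, model in (("primary", self.primary), ("fallback", self.fallback)):
            try:
                output = model(prompt)
            except Exception:
                self.stats[f"{name}_error"] += 1
                continue
            if self.is_valid(output):
                self.stats[f"{name}_ok"] += 1
                return output
            self.stats[f"{name}_invalid"] += 1
        # Neither model produced a usable answer: hand off to a person.
        self.stats["needs_human_review"] += 1
        return None


def flaky_primary(prompt: str) -> str:
    """Stub standing in for a real model; fails on an assumed edge case."""
    if "edge" in prompt:
        raise TimeoutError("simulated timeout")
    return "ok: " + prompt
```

None of this changes what a buyer sees in a demo built on well-behaved inputs, where the primary path succeeds every time; the counters and the review queue only matter once real inputs arrive.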

AI performance is probabilistic by nature.

Unlike the outputs of traditional software, AI outputs can vary from one run to the next, even for the same input. Demonstrations hide this variability by narrowing scope and inputs, creating a false sense of stability.
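One way a buyer could surface this variability during a trial is to send the same prompt many times and measure how often the outputs agree. The sketch below is illustrative only: `agreement_rate` and `make_stub_model` are hypothetical names, and the stub cycles through fixed answers (rather than sampling randomly) so the example is reproducible. A real evaluation would call the vendor's model and would likely need a looser notion of "same output" than exact string equality.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Iterable


def agreement_rate(generate: Callable[[str], str], prompt: str, runs: int = 20) -> float:
    """Fraction of runs that return the most common output for one prompt."""
    outputs = [generate(prompt) for _ in range(runs)]
    most_common_count = Counter(outputs).most_common(1)[0][1]
    return most_common_count / runs


def make_stub_model(curated_prompts: Iterable[str]) -> Callable[[str], str]:
    """Stub model: stable on curated demo prompts, inconsistent on anything else."""
    curated = set(curated_prompts)
    # Deterministic stand-in for sampling variability.
    varying = cycle(["answer-A", "answer-B", "answer-A", "answer-C"])

    def generate(prompt: str) -> str:
        if prompt in curated:
            return "answer-A"
        return next(varying)

    return generate


demo_prompt = "Summarize this invoice."
real_prompt = "Summarize this invoice with missing totals and two currencies."

model = make_stub_model([demo_prompt])
demo_rate = agreement_rate(model, demo_prompt)  # curated input: always agrees
real_rate = agreement_rate(model, real_prompt)  # uncurated input: agrees half the time
```

A trial that only exercises prompts like `demo_prompt` would report perfect consistency, which is the narrowing effect described above.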

Market pressure favors speed over durability.

Vendors compete on features and perceived capability. Slowing down to harden systems risks falling behind competitors who prioritize rapid iteration.

Among mature vendors serving regulated or mission-critical environments, reliability does receive greater emphasis, but this typically comes later in the product lifecycle and at higher cost.

How This Manifests After Deployment

Once deployed, users encounter inconsistent behavior. Edge cases appear. Latency fluctuates. Confidence erodes as the gap between demo expectations and real performance becomes clear.

Teams compensate by adding review steps, limiting use cases, or reverting to manual workflows for critical tasks.

Impact on Adoption and Trust

Reliability issues undermine adoption more than outright failure does. Users don’t stop using the tool immediately; they simply stop depending on it. AI becomes optional rather than integral.

Over time, trust declines. Expansion slows. Organizations hesitate to scale usage, and renewal discussions shift from growth to risk management.

This dynamic also reinforces skepticism toward future AI purchases, even from different vendors.

What This Means

Demos prove possibility, not dependability. Until incentives shift toward long-term reliability rather than short-term persuasion, this gap will continue to shape AI adoption outcomes.

Confidence: High

Why: This pattern is consistently observed across AI sales cycles, post-deployment reviews, and customer feedback, regardless of model quality or vendor size.